AI and You: White House Preps Executive Order, the Beatles Revive Lost Lennon Song

Get up to speed on the rapidly evolving world of AI with our roundup of the week's developments.

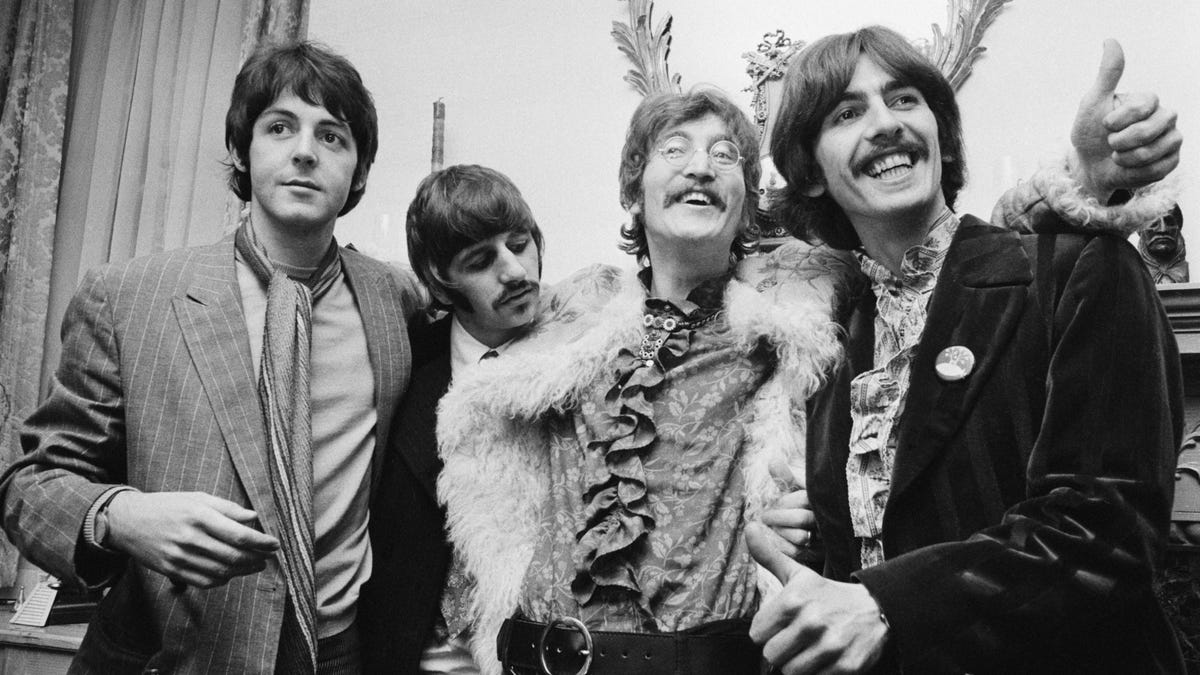

The Beatles at the press launch for their album Sgt. Pepper's Lonely Hearts Club Band in 1967.

Those waiting to see what the Biden administration is going to say about AI regulation can expect a very long read on Monday. That's when the White House is due to release its AI executive order -- just two days before the AI Safety Summit, an international gathering that's being held in Britain, according to the Washington Post.

"The sweeping order would leverage the US government's role as a top technology customer by requiring advanced AI models to undergo assessments before they can be used by federal workers," the Post reported, citing unnamed sources. "Recent rapid advances in artificial intelligence have raised the stakes, as the launch of ChatGPT and other generative AI tools has accelerated a global movement to regulate US tech giants. Policymakers around the world are increasingly worried that AI could supercharge long-running concerns about tech's impact on jobs, surveillance and democracy, especially ahead of a critical year for elections around the globe."

While US lawmakers are still working to develop bipartisan legislation on the use of AI, the European Union is expected to offer up its EU AI Act to protect consumers by year's end.

There are many opinions about what and how much regulation the US and other governments should create to oversee AI tech, particularly generative or conversational AI like ChatGPT. TechNet, a bipartisan lobbying group for the tech industry, released on Friday a five-page Federal AI Policy Framework outlining the policies it sees as necessary to regulate AI "while allowing America to maintain its global AI leadership." Meanwhile, AI thought leaders, including some who are considered the "godfathers" of AI like ex-Googler Geoffrey Hinton and Yoshua Bengio, released an open letter on Oct. 16 calling on governments to manage AI risks.

TechNet, whose members include Amazon, Apple, Box, Cisco, Dell, eBay, Google, HP, Meta, Samsung, Verizon and Zoom, is calling for, among other things:

- Protecting consumers' personal information.

- Appointing a central coordinator for the federal government's development, deployment and use of AI systems.

- Disclosing to users when content is created using generative AI.

- Adopting measures to identify, track and mitigate unintended bias and discrimination.

"Our AI policy recommendations are the result of our member companies' decades of knowledge and experience," TechNet CEO Linda Moore said in a statement. The group also launched a $25 million public information campaign called AI For America to "promote AI's current and future benefits and educate and inform Americans on how AI is already improving their lives, growing our economy, and keeping us safe."

Meanwhile, Hinton, Bengio and 22 other researchers signed an open letter called Managing AI Risks in an Era of Rapid Progress, calling on governments to put regulations in place to manage AI.

"If managed carefully and distributed fairly, advanced AI systems could help humanity cure diseases, elevate living standards and protect our ecosystems. The opportunities AI offers are immense," the group wrote in their seven-page assessment. "But alongside advanced AI capabilities come large-scale risks that we are not on track to handle well. Humanity is pouring vast resources into making AI systems more powerful, but far less into safety and mitigating harms. For AI to be a boon, we must reorient; pushing AI capabilities alone is not enough."

They're asking for governments to require:

- AI model registration, whistleblower protections, incident reporting, and monitoring of model development and supercomputer usage.

- Access to advanced AI systems before deployment to evaluate them for dangerous capabilities such as autonomous self-replication, breaking into computer systems or making pandemic pathogens widely accessible.

When it comes to AI regulation, stay tuned.

Here are the other doings in AI worth your attention.

One last 'new' tune from The Beatles

The other big news coming next week: the "last new Beatles song." The track, called Now and Then, will be released Nov. 2 and is made possible with help from AI, The Beatles said in an Instagram post.

It's "from the same batch of unreleased demos written and sung by the late John Lennon, which were taken by his former bandmates to construct the songs 'Free As a Bird' and 'Real Love,' released in the mid-1990s," according to the Associated Press. While George Harrison, Paul McCartney and Ringo Starr worked on Now and Then in the mid-'90s, they weren't able to finish the song for technical reasons.

Four decades after it was initially recorded, Now and Then got an AI boost from director Peter Jackson, who put together the 2021 documentary The Beatles: Get Back using original footage from the recording sessions in which the Fab Four produced the album Let It Be.

Jackson was able to separate Lennon's original vocals from the late 1970s recording. "The much clearer vocals allowed McCartney and Starr to complete the track last year," the AP said. "There it was, John's voice, crystal clear," McCartney said in the announcement, according to the AP. "It's quite emotional. And we all play on it, it's a genuine Beatles recording. In 2023, to still be working on Beatles music, and about to release a new song the public haven't heard, I think it's quite an exciting thing."

There's actually a lot going on in Now and Then.

"The new single contains guitar that Harrison had recorded nearly three decades ago, a new drum part by Starr, with McCartney's bass, piano and a slide guitar solo he added as a tribute to Harrison, who died in 2001. McCartney and Starr sang backup. McCartney also added a string arrangement written with the help of Giles Martin, son of the late Beatles producer George Martin," the AP reported. They weaved in backing vocals from the original Beatles recordings of Here, There and Everywhere, Eleanor Rigby and Because.

The day before the new song is released, a 12-minute film that tells the story behind Now and Then will be shared. The trailer is here.

Continuing to follow the AI money trail

Tech earnings this past week included results from Microsoft, Google and Meta, which all talked up their AI initiatives. But what analysts took note of was how Microsoft's cloud business got a boost from gen AI while Google's cloud computing business fared less well. For the record, Microsoft is a big investor ($13 billion) in OpenAI and its ChatGPT, which helps power its Bing search business.

"The companies' divergent fortunes came in the cloud computing market, which involves delivering IT services to customers over the internet. Microsoft reported an unexpected rebound in growth in its cloud computing platform, Azure, after a year in which many customers have been squeezing their cloud spending," The Financial Times noted. "Meanwhile, growth in Google's cloud computing division sagged to 22%."

The TL;DR: "Microsoft was certainly in front of AI faster and they're monetising it well," Brent Thill, an analyst at Jefferies, told the FT. "Google struggled."

Meanwhile, Meta topped analysts' forecasts for sales and profit and said 2024 will be a big year for AI at the company. Meta in September introduced character-based AI personalities, with CEO Mark Zuckerberg saying on the earnings call, "We think that there are going to be a lot of different AIs" users will be able to interact with.

"We're designing these to make it so that they can help facilitate and encourage interactions between people and make things more fun by making it so you can drop in some of these AIs into group chats and things like that just to make the experiences more engaging," Zuckerberg said. "The AIs also have profiles in Instagram and Facebook and can produce content and over time, we're going to be able to interact with each other. And I think that's going to be an interesting dynamic and an interesting, almost a new kind of medium and art form."

New? Yes. Art form? I'll wait and see before agreeing to that.

The UN wants to understand the dangers of AI

Want to solve world problems? Start a committee. At least that's what United Nations Secretary-General António Guterres did Thursday when he announced a new interdisciplinary advisory board that will bring together up to 38 experts from around the world to talk about how AI can be "governed for the common good."

"Globally coordinated AI governance is the only way to harness AI for humanity, while addressing its risks and uncertainties, as AI-related applications, algorithms, computing capacity and expertise become more widespread internationally," the United Nations said.

James Manyika, president for research, technology and society at Google, is co-chair of the group, which includes reps from Microsoft, Sony, OpenAI, numerous governments, research foundations and at least one digital anthropologist.

The new New Coke?

Coca-Cola has created a limited-edition version of its popular soft drink called Y3000 -- it's a reference to the future and the year 3000 -- by asking humans to work with an AI to help create both the taste and the bottle design.

"Created to show us an optimistic vision of what's to come, where humanity and technology are more connected than ever," the company said. "Coca‑Cola Y3000 Zero Sugar was co-created with human and artificial intelligence by understanding how fans envision the future through emotions, aspirations, colors, flavors and more."

As for the bottle, "the design showcases liquid in a morphing, evolving state, communicated through form and color changes that emphasize a positive future. A light-toned color palette featuring violet, magenta and cyan against a silver base gives a futuristic feel. The iconic Spencerian Script features a connected matrix with fluid dot clusters that merge to represent the human connections of our future planet."

Okie doke.

Anyway, the sugar-free soft drink is available in the US, Canada, China, Europe and Africa. If anyone tries it, let me know.

Forget co-pilot. Is AI akin to a loom, slide rule or crane?

At the Bloomberg Technology Summit this past week, the head of the media organization's venture capital division, Roy Bahat, compared the arrival of AI to three sets of technology: looms, slide rules and cranes. Here's how he described them:

- "A loom is designed to replace a person. The same way the Luddites fought against the introduction of the spinning loom."

- A slide rule "assists a person in making a calculation. It speeds you up, allows you to be more accurate and make fewer mistakes."

- "A crane allows a human being to do something that, but for the existence of that crane, they would not be able to do. There's no amount of time that a human can spend to lift a steel beam five stories into the air."

So which is AI? Bloomberg reporter Alex Webb offers this conclusion: "As companies, lawmakers and consumers alike try to work out where to draw the line in terms of what they think is acceptable for AI, this framework is a good starting point. It's just not clear to filmmakers, musicians or anyone else which of these three tools AI will be to their trades."

Agreed, though I'm leaning toward crane.

AI terms of the week: frontier AI and narrow AI

This week I'm offering two terms that aim to define the scope of an AI system. The definition for frontier AI is pulled from the glossary of terms provided by the UK's AI Safety Summit. That stands alongside narrow AI, which is also sometimes called weak AI, according to "50 AI terms every beginner should know" from Telus International.

Frontier AI: AI that is "at the cutting edge of technological advancement -- therefore offering the most opportunities but also presenting new risks. It refers to highly capable general-purpose AI models, most often foundation models, that can perform a wide variety of tasks and match or exceed the capabilities present in today's most advanced models. It can then enable narrow use cases."

Weak or narrow AI: This is a model that has a set range of skills and focuses on one particular set of tasks. Most AI currently in use is weak AI, unable to learn or perform tasks outside of its specialist skill set.

Editors' note: CNET is using an AI engine to help create some stories. For more, see this post.
